Practical Dependent Types: Type-Safe Neural Networks

Justin Le https://blog.jle.im (justin@jle.im)

Lambdaconf 2017, May 27, 2017

Preface

Slide available at https://talks.jle.im/lambdaconf-2017/dependent-types/dependent-types.html.

All code available at https://github.com/mstksg/talks/tree/master/lambdaconf-2017/dependent-types.

Libraries required: (available on Hackage) hmatrix, singletons, MonadRandom. GHC 8.x assumed.

The Big Question

The big question of Haskell: What can types do for us?

Dependent types are simply the extension of this question, pushing the power of types further.

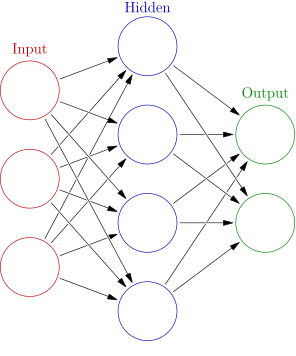

Artificial Neural Networks

Parameterized functions

Each layer receives an input vector, x : ℝn, and produces an output y : ℝm.

They are parameterized by a weight matrix W : ℝm × n (an m × n matrix) and a bias vector b : ℝm, and the result is: (for some activation function f)

y = f(Wx + b)

A neural network would take a vector through many layers.

Networks in Haskell

Running them

Generating them

randomWeights :: MonadRandom m => Int -> Int -> m Weights

randomWeights i o = do

seed1 :: Int <- getRandom

seed2 :: Int <- getRandom

let wB = randomVector seed1 Uniform o * 2 - 1

wN = uniformSample seed2 o (replicate i (-1, 1))

return $ W wB wN

randomNet :: MonadRandom m => Int -> [Int] -> Int -> m Network

randomNet i [] o = O <$> randomWeights i o

randomNet i (h:hs) o = (:~) <$> randomWeights i h <*> randomNet h hs oHaskell Heart Attacks

- What if we mixed up the dimensions for

randomWeights? - What if the user mixed up the dimensions for

randomWeights? - What if layers in the network are incompatible?

- How does the user know what size vector a network expects?

- Is our

runLayerandrunNetimplementation correct?

Backprop

Backprop (Outer layer)

go :: Vector Double -- ^ input vector

-> Network -- ^ network to train

-> (Network, Vector Double)

-- handle the output layer

go !x (O w@(W wB wN))

= let y = runLayer w x

o = logistic y

-- the gradient (how much y affects the error)

-- (logistic' is the derivative of logistic)

dEdy = logistic' y * (o - target)

-- new bias weights and node weights

wB' = wB - scale rate dEdy

wN' = wN - scale rate (dEdy `outer` x)

w' = W wB' wN'

-- bundle of derivatives for next step

dWs = tr wN #> dEdy

in (O w', dWs)Backprop (Inner layer)

-- handle the inner layers

go !x (w@(W wB wN) :~ n)

= let y = runLayer w x

o = logistic y

-- get dWs', bundle of derivatives from rest of the net

(n', dWs') = go o n

-- the gradient (how much y affects the error)

dEdy = logistic' y * dWs'

-- new bias weights and node weights

wB' = wB - scale rate dEdy

wN' = wN - scale rate (dEdy `outer` x)

w' = W wB' wN'

-- bundle of derivatives for next step

dWs = tr wN #> dEdy

in (w' :~ n', dWs)Compiler, O Where Art Thou?

- Haskell is all about the compiler helping guide you write your code. But how much did the compiler help there?

- How can the “shape” of the matrices guide our programming?

- We basically rely on naming conventions to make sure we write our code correctly.

Haskell Red Flags

- How many ways can we write the function and have it still typecheck?

- How many of our functions are partial?

A Typed Alternative

An o x i layer

A Typed Alternative

From HMatrix:

An R 3 is a 3-vector, an L 4 3 is a 4 x 3 matrix.

Data Kinds

With -XDataKinds, all values and types are lifted to types and kinds.

In addition to the values True, False, and the type Bool, we also have the type 'True, 'False, and the kind Bool.

In addition to : and [] and the list type, we have ': and '[] and the list kind.

Data Kinds

A Typed Alternative

Running

runLayer :: (KnownNat i, KnownNat o)

=> Weights i o

-> R i

-> R o

runLayer (W wB wN) v = wB + wN #> v

runNet :: (KnownNat i, KnownNat o)

=> Network i hs o

-> R i

-> R o

runNet (O w) !v = logistic (runLayer w v)

runNet (w :~ n') !v = let v' = logistic (runLayer w v)

in runNet n' v'Exactly the same! No loss in expressivity!

Running

Much better! Matrices and vector lengths are guaranteed to line up!

Generating

randomWeights :: (MonadRandom m, KnownNat i, KnownNat o)

=> m (Weights i o)

randomWeights = do

s1 :: Int <- getRandom

s2 :: Int <- getRandom

let wB = randomVector s1 Uniform * 2 - 1

wN = uniformSample s2 (-1) 1

return $ W wB wNNo need for explicit arguments! User can demand i and o. No reliance on documentation and parameter orders.

Generating

But, for generating nets, we have a problem:

randomNet :: forall m i hs o. (MonadRandom m, KnownNat i, KnownNat o)

=> m (Network i hs o)

randomNet = case hs of [] -> ??Pattern matching on types

The solution for pattern matching on types: singletons.

Pattern matching on types

Random networks

Implicit passing

Explicitly passing singletons can be ugly.

We can now recover the expressivity of the original function, and gain demand-driven shapes.

Backprop

train :: forall i hs o. (KnownNat i, KnownNat o)

=> Double -- ^ learning rate

-> R i -- ^ input vector

-> R o -- ^ target vector

-> Network i hs o -- ^ network to train

-> Network i hs o

train rate x0 target = fst . go x0Ready for this?

Backprop

go :: forall j js. KnownNat j

=> R j -- ^ input vector

-> Network j js o -- ^ network to train

-> (Network j js o, R j)

-- handle the output layer

go !x (O w@(W wB wN))

= let y = runLayer w x

o = logistic y

-- the gradient (how much y affects the error)

-- (logistic' is the derivative of logistic)

dEdy = logistic' y * (o - target)

-- new bias weights and node weights

wB' = wB - konst rate * dEdy

wN' = wN - konst rate * (dEdy `outer` x)

w' = W wB' wN'

-- bundle of derivatives for next step

dWs = tr wN #> dEdy

in (O w', dWs)

-- handle the inner layers

go !x (w@(W wB wN) :~ n)

= let y = runLayer w x

o = logistic y

-- get dWs', bundle of derivatives from rest of the net

(n', dWs') = go o n

-- the gradient (how much y affects the error)

dEdy = logistic' y * dWs'

-- new bias weights and node weights

wB' = wB - konst rate * dEdy

wN' = wN - konst rate * (dEdy `outer` x)

w' = W wB' wN'

-- bundle of derivatives for next step

dWs = tr wN #> dEdy

in (w' :~ n', dWs)Backprop

-- handle the inner layers

go !x (w@(W wB wN) :~ n)

= let y = runLayer w x

o = logistic y

-- get dWs', bundle of derivatives from rest of the net

(n', dWs') = go o n

-- the gradient (how much y affects the error)

dEdy = logistic' y * dWs'

-- new bias weights and node weights

wB' = wB - konst rate * dEdy

wN' = wN - konst rate * (dEdy `outer` x)

w' = W wB' wN'

-- bundle of derivatives for next step

dWs = tr wN #> dEdy

in (w' :~ n', dWs)Surprise! It’s actually identical! No loss in expressivity. Typed holes can write our code for us in many cases. And shapes are all verified.

Type-Driven Development

We wrote an untyped implementation, then realized what was wrong. Then we added types, and everything is great!

Further reading

- Blog series: https://blog.jle.im/entries/series/+practical-dependent-types-in-haskell.html

- Extra resources: